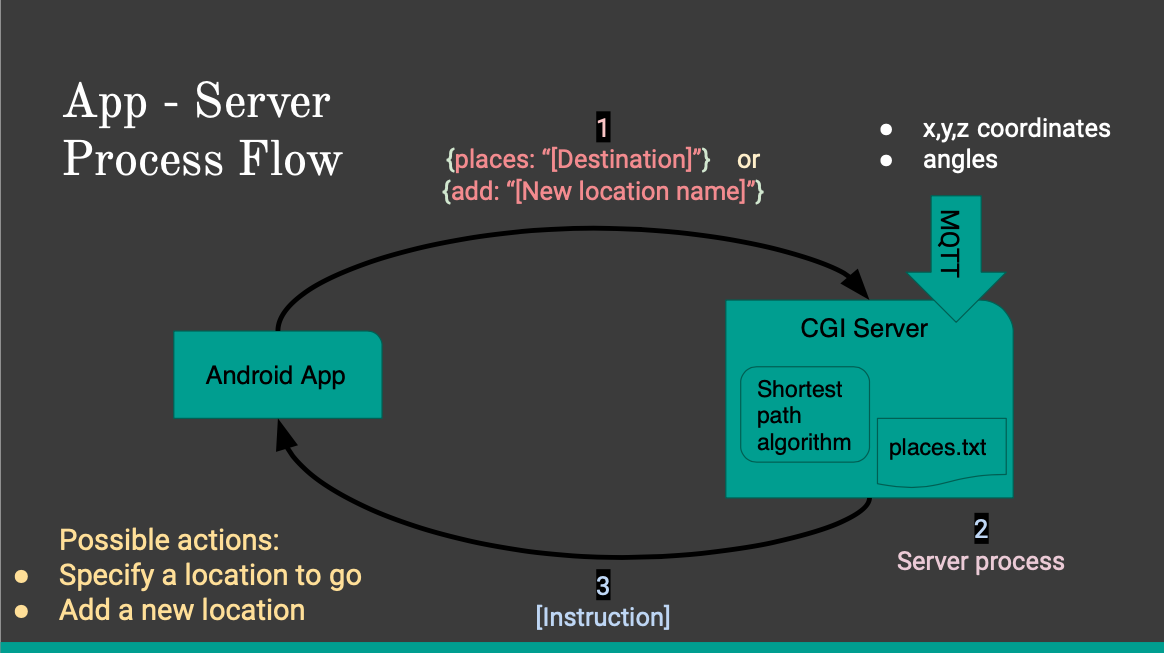

App - Server Process Flow

The Android-based application lets the user request a specific destination using only voice commands, and it lets the user add a new location based on the position of the tag at the moment the command is issued. The CGI server receives either the name of the desired destination or the name of a new location.

In the first case, the server looks up the coordinates of the destination in a file called "places.txt". Using the user's current position, provided by the UWB tag, and orientation, provided by the BNO055, it calculates the shortest path and, from it, the immediate instruction the user should follow to start navigating towards the destination. In the second case, the server obtains the user's coordinates at that instant and stores them in "places.txt" under the given name.

The messages sent by the client are in JSON format and contain a single key-value pair, in which the key is either "add" or "place" and the value is the location name. The server replies with the instruction the user should follow next, and the app uses speech synthesis to read it aloud. Each time the app finishes speaking an instruction, it sends another request to obtain the next one.
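The request handling described above can be sketched as follows. This is a minimal illustration under several assumptions, not the project's actual server code: the one-entry-per-line `name,x,y` layout of "places.txt", the function names, the 0.5 m arrival radius, and the heading convention (degrees counter-clockwise from the +x axis) are all hypothetical. The section does not specify how the shortest path is computed, so `next_instruction` substitutes a plain straight-line bearing toward the target purely to keep the sketch runnable.

```python
import json
import math
import os

PLACES_FILE = "places.txt"


def load_places(path=PLACES_FILE):
    """Read 'name,x,y' lines (assumed format) into a dict of name -> (x, y)."""
    places = {}
    if not os.path.exists(path):
        return places
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line:
                continue
            # rsplit keeps any commas that appear inside the place name
            name, x, y = line.rsplit(",", 2)
            places[name] = (float(x), float(y))
    return places


def add_place(name, position, path=PLACES_FILE):
    """Append the tag's current (x, y) position under the given name."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(f"{name},{position[0]},{position[1]}\n")


def next_instruction(position, heading_deg, target, arrive_radius=0.5):
    """Hypothetical stand-in for the shortest-path step: steer straight at the target.

    heading_deg is the BNO055 yaw, assumed here to be measured
    counter-clockwise from the +x axis of the UWB coordinate frame.
    """
    dx, dy = target[0] - position[0], target[1] - position[1]
    if math.hypot(dx, dy) < arrive_radius:
        return "You have arrived"
    bearing = math.degrees(math.atan2(dy, dx))
    # Signed angle from the current heading to the target bearing, in [-180, 180)
    delta = (bearing - heading_deg + 180) % 360 - 180
    if abs(delta) <= 20:
        return "Go straight"
    # Positive delta means the target is counter-clockwise, i.e. to the left
    return "Turn left" if delta > 0 else "Turn right"


def handle_request(body, position, heading_deg, path=PLACES_FILE):
    """Dispatch a single-key JSON message: {"add": name} or {"place": name}."""
    msg = json.loads(body)
    if len(msg) != 1:
        return "Error: expected exactly one key"
    key, name = next(iter(msg.items()))
    if key == "add":
        add_place(name, position, path)
        return f"Saved {name}"
    if key == "place":
        places = load_places(path)
        if name not in places:
            return f"Unknown place {name}"
        return next_instruction(position, heading_deg, places[name])
    return f"Error: unknown key {key}"
```

For example, `handle_request('{"add": "kitchen"}', (2.0, 0.0), 0.0)` stores the current tag position as "kitchen", and a later `handle_request('{"place": "kitchen"}', (0.0, 0.0), 0.0)` returns "Go straight". Keeping the position and heading as parameters, rather than reading the sensors inside the handler, lets the same logic sit behind whatever CGI entry point actually reads the request body and queries the UWB tag and BNO055.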